How not to be mistaken for a chatbot

Top signs from researchers that something was written by AI, and how to avoid sounding like a chatbot yourself.


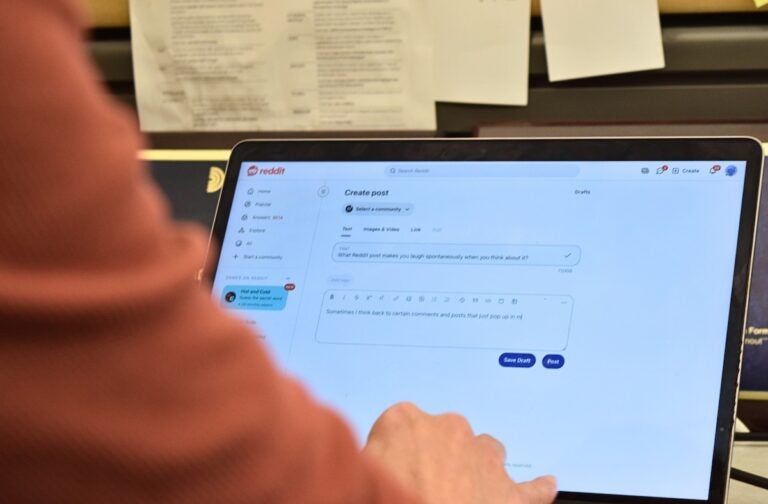

Users on social platforms like Reddit are being accused of using AI to write posts. (Nichole Currie/WHYY)

This story is from The Pulse, a weekly health and science podcast. Subscribe on Apple Podcasts, Spotify, or wherever you get your podcasts.

Find our full episode on the psychological effects of AI here.

Jenny Campbell remembers distinctly the first time she was accused of being a chatbot. She was trawling Reddit, when she came across a question related to mental health. It was serious — so Campbell, who has an unofficial mental health background, immediately switched into counselor mode as she typed up a reply.

“I was like, ‘OK, I’m going to help this person,’” she said, “and I was very professional.”

Maybe a little too professional, she now says — but she was following the formula she’d learned: begin with an affirmation, proceed through the problem, and finish with another positive affirmation.

“I wrote, like, maybe three paragraphs, because I like helping people and I thought I was being helpful.”

Campbell posted her comment, and, not long after, got a notification that someone had replied. But when she clicked on it, she saw that it wasn’t an expression of gratitude or commiseration, or even anything related to what she’d written. It was an accusation — that the comment had been written by AI.

“That was kind of hurtful,” Campbell said. “Because when you think about helping someone, and you’re putting in the thought, you’re putting in the effort, only for them to say, ‘Oh, you’re AI.’ And they don’t even bother reading what you wrote.”

It was the first time Campbell was accused of being, or using, AI on Reddit, but it wouldn’t be the last. A look through her profile shows roughly a dozen instances over the past five months of Campbell defending herself against accusations of AI usage by other commenters — accusations that would sometimes veer into harassment.

“Every time I type out something intelligent, I’m accused of being AI,” she commented in one post. “I am still deciding on whether I should take it as a compliment or an insult.”

Although commenters seldom offered justifications for their accusations, Campbell speculates that they stem from the fact that she’s always been a decent writer — in part, because she had to be. Campbell is deaf, which means that, as a kid, she really struggled to communicate.

“I had all these thoughts, I had all these ideas, but I couldn’t articulate them,” she said.

Writing became Campbell’s lifeline, and it has remained a big part of her life, even after she got a cochlear implant. These days, it’s a sort of hobby for her: writing posts and trading comments in subreddits dedicated to her niche interests, which range from consciousness and enlightenment to Jungian psychology. She tries not to let the accusations get to her, but it can be hard.

“I’m putting in all this work and I’m putting in all this effort — those are my thoughts,” she said. “And then, people [are] telling me that it’s not my thoughts, it’s not my writing. It’s quite frustrating.”

Identifying ‘AI slop’

As ChatGPT and other chatbots have exploded in popularity over the past few years, accusations of AI use have grown along with them. The anti-AI contingent has even developed its own epithet for suspected chatbot content: “AI slop.”

And those accusations have expanded beyond comment sections. They’ve become a hot topic in multiple writing subreddits, with some authors complaining that the obsession with AI has become a witch hunt (and at least one writer apparently suing an accuser). People have reported conflicts with friends, students have faced disciplinary action, and others have even received death threats — all over suspected (or, in some cases, real) AI use.

All of which raises the question: are humans, or even AI detector tools, actually capable of identifying AI writing? And if so, how?

For the most part, research has shown that neither humans nor AI detector tools are especially accurate at differentiating between human-produced and AI-produced writing.

“The majority of studies suggest that people are quite bad at AI detection on average,” says Liam Dugan, a PhD student at the University of Pennsylvania who studies AI-generated content.


However, Dugan adds, his work with computer and information science professor Chris Callison-Burch has shown that some human experts are able to detect AI text fairly accurately.

“These experts seem to be those who frequently use AI models and have lots of experience detecting generated text,” Dugan said.

Callison-Burch agrees.

“I don’t think that studies show that people are good at detecting AI in all conditions,” he said. “Instead people think they are good about spotting AI when they know the telltale signs.”

What are the telltale signs of AI?

There are plenty of articles and YouTube videos that purport to identify AI hallmarks — but arguably none are as comprehensive as a recent study by Alex Reinhart, an associate teaching professor of statistics and data science at Carnegie Mellon University.

In February 2025, Reinhart and his colleagues published a study that compared human and AI writing styles in an effort to identify some of the differences between them. To do so, they compiled 12,000 human-written texts of 1,000 words or more from a variety of genres, gave the first 500 words of each to large language models, such as ChatGPT and Meta’s Llama, and asked the models to write the next 500 words.

They then studied the chatbots’ output to see if they could pick up on any patterns — and they found some good ones. Take vocabulary, for instance.

“ChatGPT, in particular, loves words like ‘underscore’ and ‘intricate’ and ‘camaraderie’ and ‘tapestry,’ and uses them more than 150 times more often than humans did,” Reinhart said. “One example we got from ChatGPT, it used ‘camaraderie’ 162 times more often than humans did and ‘palpable’ 95 times more often. And there is a sentence in our dataset where it said, ‘The camaraderie is palpable.’”
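For readers curious about what a figure like “162 times more often” measures, here is a minimal sketch of one way such a ratio could be computed: count how often a word appears per million words in AI text, then divide by the same rate in human text. This is an illustration only, not the study’s actual code. The function names, the crude tokenizer, and the two toy sentences are all assumptions made up for the example.

```python
import re
from collections import Counter


def tokenize(text):
    # Lowercase the text and keep runs of letters; a deliberately crude tokenizer.
    return re.findall(r"[a-z]+", text.lower())


def rate_per_million(word, texts):
    # Occurrences of `word` per million words, pooled across all texts.
    counts = Counter()
    for text in texts:
        counts.update(tokenize(text))
    total = sum(counts.values())
    return 1_000_000 * counts[word] / total if total else 0.0


def rate_ratio(word, ai_texts, human_texts):
    # How many times more often `word` appears in AI text than in human text.
    human_rate = rate_per_million(word, human_texts)
    if human_rate == 0:
        return float("inf")  # the word never appears in the human sample
    return rate_per_million(word, ai_texts) / human_rate


# Toy data, invented for illustration: 10 words each.
human_texts = ["the team felt real camaraderie after the long season ended"]
ai_texts = ["the camaraderie is palpable and the camaraderie endures even now"]

print(rate_ratio("camaraderie", ai_texts, human_texts))  # prints 2.0
```

With only a sentence or two, a ratio like this says very little; it takes a large collection of texts, like the thousands used in the study, before such numbers become meaningful.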

Reinhart and his co-authors also found grammatical differences — for instance, large language models tend to eschew the passive voice, but have a preference for certain tenses or verb forms.

“GPT 4.0, the previous version, really loves to use present participle, a grammatical feature, at something like five times more often than humans do,” Reinhart said.

Large language models also seem to prefer using slightly longer words than the average human.

Reinhart’s study didn’t look at formatting or punctuation, but it has become widely accepted across the internet that other signs of AI writing include em dashes, frequent use of subheadings and bold-faced type, emojis, negative parallel structures, and more.

The problem with using these hallmarks to identify AI writing, Reinhart says, is that large language models are changing all the time. He’s already found that some of the patterns he identified in past versions of ChatGPT have shrunk or disappeared in more recent versions, which means that none of the signs above is a reliable way to detect AI.

“It does feel like a race to keep up with all of the new models,” he said. “And there are so many companies and open source projects releasing models now that you can’t test them all and you can’t evaluate the style of every one.”

And chatbots aren’t the only ones adapting — humans are too.

Jenny Campbell, for instance, recently resorted to changing her writing style in order to cut down on the accusations. She stopped using em dashes after reading they’re a common hallmark of AI. She even began inserting mistakes into her writing, a move she calls “painful” — but necessary if she wants to avoid more attacks.

“I’m getting tired of defending myself,” she said. “And I know I’m not the only one who’s been accused of being AI. I’ve been hearing a lot about it.”
