Sound it out: the (sometimes creepy) history of the talking machine

Listen

A woman sitting at a VODER talking machine. (Screen grab of youtube video by Mono Thyratron)

The latest iPhones are out in stores. They include lots of new features, a better camera, as well as more from the little voice inside the gadget, Siri.

Similar to products from Amazon and Google, the virtual assistant can now be voice activated with a simple prompt.

How it responds, however, may leave you wanting more.

Artificial voices have come a long way, still, there’s no way you’d mistake Siri’s voice for a human one.

This got us wondering how long inventors have been trying and failing to recreate the thing that is quintessentially ours.

Speaking up

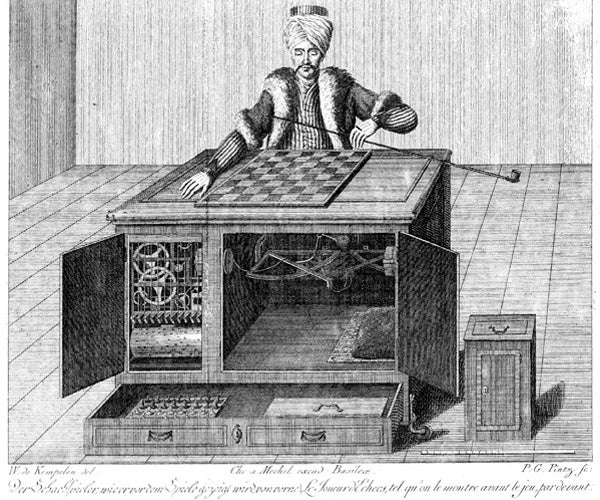

It’s hard to pinpoint the exact start of the quest for a decent artificial voice. We’ll begin, though, in central Europe in 1770 with a mute automaton made of wood and metal. Dubbed The Turk, it consisted of a dummy draped in robes, wearing a turban and attached to a box-like cabinet in place of legs.

The Turk was rolled into palaces and royal courts, where it would play games of chess for the amusement of the upper class.

“It is now pretty well acknowledged that there was a concealed person inside of it, but it was done very ingeniously, so nobody was able to expose it at the time,” says Patrick Feaster, a historian who studies the culture and preservation of early sound at Indiana University, Bloomington.

Inside the cabinet, a hidden chessmaster manipulated a complicated series of levers, controlling The Turk’s one moveable arm, which advanced the chess pieces.

A copper engraving of the Turk, showing the open cabinets and working parts. A ruler at bottom right provides scale. (Public domain,wikimedia commons)

Dignitaries across the continent somehow fell for this ruse. Over the years The Turk was able to checkmate both Napoleon Bonaparte and Ben Franklin.

The inventor behind the prank was Wolfgang von Kempelen, and to help sell the device, the Hungarian began working on another invention: a “speaking machine.”

“I suspect that one of the reasons for it may have been to simulate speech to be used as part of the chess player,” Feaster says.

“Ideally, speech that would sound a little bit inhuman so that it would make the hoax all the more convincing.”

Von Kempelen’s speaking device employed bellows as a stand-in for lungs, forcing air through a reed, which would vibrate like a voice box.

“And so to play this device, which was enclosed inside a box, Kempelen would stick his hands through holes in the outside and use his arm to manipulate the bellows,” Feaster says.

Von Kempelen’s machine was complicated to use, and never made it into The Turk. But it did eventually expand its vocabulary, including the words ‘Mississippi,’ ‘Constantinople,’ and ‘schachspieler,’ the German word for ‘chess player.’

The inventor spent decades tinkering with and re-designing his speaking machine, and in 1791, published a book on the mechanisms of human speech.

It’s possible that German inventor Joseph Faber read that text.

“Faber took this thing one step further then Kempelen had,” Feaster says.

“He finally managed to simulate all the speech sounds that he could think of, and then he connected the thing to a keyboard.”

Faber unveiled the Euphonia in the 1840s. He would sit at the device like you would a piano, the bellows and levers and strings dancing under his fingers.

He also rigged a female mask to the Euphonia, so the strange sounds would seemingly pour out of its spooky, motionless face.

“People at the time consistently remarked on the fact that it sounded very, very monotonous … and this was very unsettling for people to hear,” Feaster says. “Nobody had ever really heard speech like that before. In fact, our modern notion of what robotic speech sounds like could probably be traced back to that point.”

A new approach

In the spring of 1890, Thomas Edison’s Talking Dolls brought real speech into the machine for the first time. Instead of producing sound, they played back recorded sound.

The dolls stood about 2 feet tall with a pretty blue dress and blond hair. There was a tiny phonograph hidden inside. Kids (rich kids by the way, because the dolls were not cheap) would wind the device to hear a collection of nursery rhymes.

One of Edison’s talking dolls. (Kai Schreiber/Flickr)

“Difficulty was, though, the machines could easily get out of order … they didn’t really work all that well,” Feaster says. “It was really an effort to use the technology for something it really wasn’t quite equipped to do yet.”

It wasn’t just that the Edison dolls didn’t work well. The bigger issue was that when you did wind the device at the exact right speed, this is what came out:

The Talking Doll was perhaps a bit ahead of its time, but that theme played well at the 1939 World’s Fair in New York, where the slogan was ‘Dawn of a New Day.’

During the fair’s run, more than 44 million people came to the borough of Queens in New York to view exhibits, and companies competed to impress the audience.

Bell Laboratories tried to trump everyone with its futuristic machine, the Voder.

“What people like Faber and Kempelen were doing was trying to create speech by controlling the vocal tract,” says Philip Rubin, CEO Emeritus of the Haskins Laboratories. Researchers at the lab study speech, language and reading.

“What happened with the Voder, it tried to do everything electronically,” Rubin says.

An operator played the Voder like a piano and used keys to activate filters and shape tones. The device wasn’t easy to manipulate, but it was closer in terms of rhythm and pitch to the way humans speak.

“It wouldn’t give people nightmares in quite the same way. It sounded like a much friendlier robot,” Feaster says.

Voder marked the start of the modern race for better talking gadgets. As processing power continued to advance, computers were soon synthesizing and creating voices, occasionally in the form of song.

In the decades since, the best talking programs have swung away from computer-generated sound, and instead they use human voices as their raw ingredient.

The talking software of today starts with an actor in a studio. He or she reads out a collection of words and sentences that get broken down into phonemes, the most basic elements of language. Once isolated, phonemes can be recombined to form, in theory, any possible word or sentence.

Apple’s Siri, arguably today’s most omnipresent talking machine, is believed to rely on this method of sound generation.

But it and many other artificial voices still fall short of stressing the correct word or words, denying the speech any emotion.

Even after hundreds of years of effort, the divide between the real and the simulated, between us and machines, remains audible.

“It is only recently that speech synthesis has found a real commercial range of applications,” says Mark Liberman, a linguist at the University of Pennsylvania, who began working on speech synthesis programs in the 1970s.

Money perhaps drove von Kempelen, Faber, Edison and others toward a better speaking machine, but Liberman believes there’s far greater financial reward on the line for today’s voice innovators.

Patrick Feaster from Indiana University says bridging the gap between human and artificial speech isn’t just about money.

“There will always be two groups of people, I think. One that likes to point to certain things that machines will never ever ever be able to do, and to value human beings more because of it,” he says.

“And then, people who will look to those precise same things, and look at those as the biggest challenges.”

WHYY is your source for fact-based, in-depth journalism and information. As a nonprofit organization, we rely on financial support from readers like you. Please give today.